How can we resolve the “Crawled - currently not indexed” status in Google Search Console?

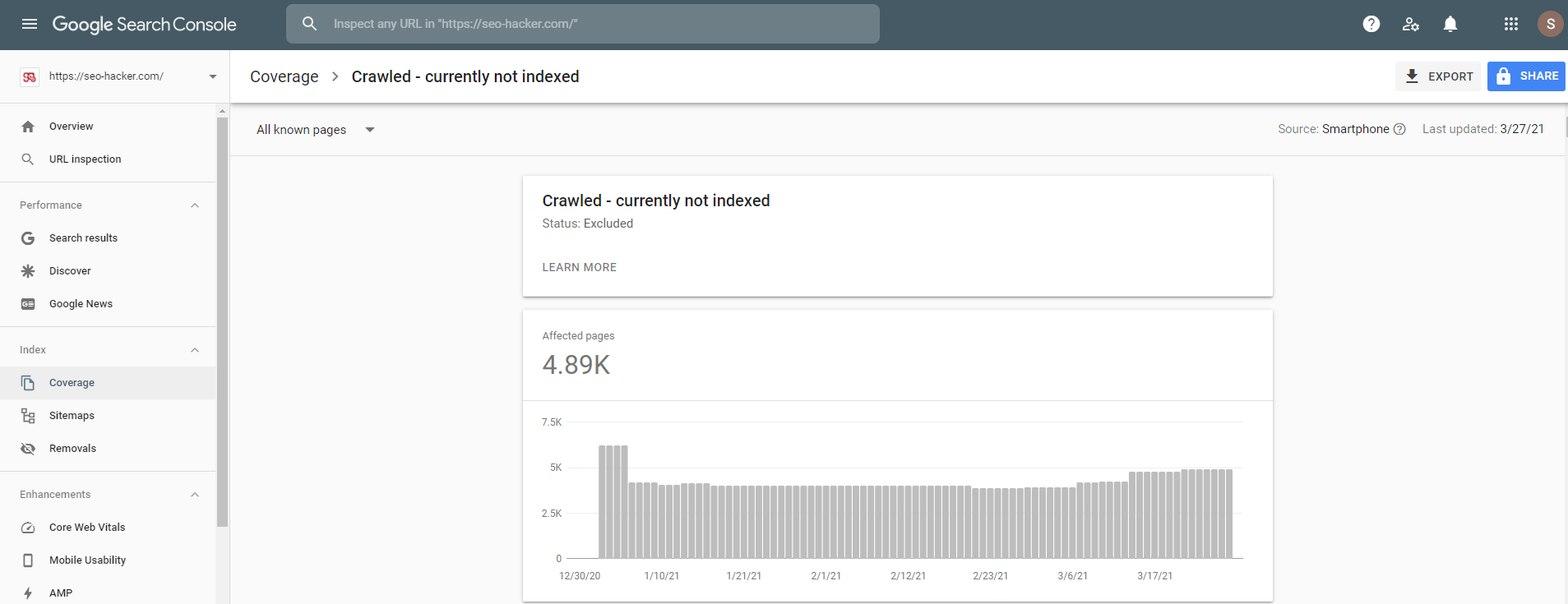

One of the most frustrating statuses you can see in Google Search Console is “Crawled - currently not indexed”, often called the “crawled but not indexed” error. It means that Google has found and crawled a page on your website or blog but has not added it to its index. There are several potential causes and several ways to address them. In this blog post, we’ll explore the most common causes of the crawled but not indexed error and how to resolve them.

What is the crawled but not indexed error in Google Search Console?

If you’re a webmaster, you’ve probably seen the “crawled but not indexed” error in Google Search Console at some point. It means that Google has crawled a page on your website but has not added it to its index.

There are a number of possible reasons for this error, including:

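The page is blocked by robots.txt: If your website has a robots.txt file, it may be blocking Google from crawling the page. Strictly speaking, a blocked page cannot be crawled and is usually reported under a separate “Blocked by robots.txt” status, but it is worth ruling out. To check, run the URL through the URL Inspection tool in Google Search Console, which reports whether crawling is allowed.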

The page is not well-formed: If the page has serious HTML syntax errors, Google may have trouble parsing and indexing it. You can use the W3C Markup Validation Service to check your markup.

The page uses unsupported technologies: If your content depends on technologies that Google does not index (like Flash), Google may not be able to index it properly. Rebuild that content in standard HTML5, for example with Google’s Web Designer tool.

Once you’ve identified the reason why your pages aren’t being indexed, you can fix the issue and ask Google to index the pages again, for example with the Request Indexing option in the URL Inspection tool.
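The robots.txt check can also be done locally. The sketch below uses Python’s standard `urllib.robotparser` module to test whether a set of rules allows a given URL. The sample rules, the example.com URLs, and the helper name `is_crawl_allowed` are made up for illustration, and Python’s parser does not replicate every detail of how Googlebot interprets robots.txt, so treat the result as a first hint rather than a final answer.

```python
from urllib.robotparser import RobotFileParser


def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt content, for illustration only.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""

if __name__ == "__main__":
    for url in ("https://example.com/blog/post", "https://example.com/private/draft"):
        verdict = "allowed" if is_crawl_allowed(SAMPLE_ROBOTS, url) else "blocked"
        print(url, "->", verdict)
```

To test a live site, paste the contents of its own robots.txt file into `SAMPLE_ROBOTS` and pass in the URLs that Search Console lists as not indexed.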

How can we resolve the crawled but not indexed error?

If you’ve been paying attention to your Google Search Console lately, you may have noticed the “Crawled - currently not indexed” status. As described above, Googlebot has crawled the affected pages, but they are not being indexed. While this can be frustrating, there are a few things to try.

First, check to see if the pages in question are blocked by robots.txt. If they are, then unblock them and resubmit the sitemap to Google.

Second, check that the pages use valid, well-structured HTML. If they don’t, fix the markup and resubmit the sitemap.

Third, check whether any links point to these pages. Links from your own site and from other sites help Google discover a page and judge its importance, so a page with no links pointing to it may be less likely to be indexed. Add internal links from relevant pages, then resubmit your sitemap to Google.

Fourth, check whether the content on these pages duplicates content elsewhere on your site or on other sites. If it does, rewrite or consolidate the pages and resubmit your sitemap.

Finally, if none of these solutions work, then you may need to wait it out. Sometimes it takes Google a while to index new pages.
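For the duplicate content check, a rough way to compare two pages is to measure how many short word sequences they share. The sketch below computes a Jaccard similarity over three-word shingles. This is a generic text-similarity technique, not a description of how Google detects duplicates, and the function names and shingle size are assumptions chosen for illustration.

```python
import re


def shingles(text: str, size: int = 3) -> set:
    """Return the set of overlapping word sequences of length `size` in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a: str, b: str, size: int = 3) -> float:
    """Return shared shingles divided by total distinct shingles (0.0 to 1.0)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa or not sb:
        return 0.0  # nothing to compare
    return len(sa & sb) / len(sa | sb)


if __name__ == "__main__":
    # Hypothetical page texts, for illustration only.
    page_a = "Our red widgets ship worldwide within two business days."
    page_b = "Our blue widgets ship worldwide within two business days."
    print(f"similarity: {jaccard_similarity(page_a, page_b):.2f}")
```

A score close to 1.0 suggests the two texts are nearly identical; what counts as too similar is a judgment call for your own site.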

What are some common causes of this error?

There are a number of potential causes for this error, but some of the most common include:

-Incorrect or outdated robots.txt file: If your site’s robots.txt file is incorrect or out of date, it can prevent Google from being able to crawl and index your content.

-Noindex tag: If a page carries a noindex tag, it tells Google not to index that page.

-Low quality content: Google may not index your content if it is low quality or thin in nature.

-Slow website: A slow website can also prevent Google from being able to crawl and index your content properly.
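Of these causes, the noindex tag is the easiest to test for automatically. The sketch below uses Python’s standard `html.parser` module to look for a robots meta tag containing `noindex` (or `none`, which implies it) in a page’s HTML. The class and function names are made up for illustration, and the check only covers meta tags in the HTML source, not HTTP headers.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() in ("robots", "googlebot"):
            content = attr_map.get("content", "")
            self.directives.extend(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def has_noindex(html: str) -> bool:
    """Return True if the HTML contains a robots meta tag that forbids indexing."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives or "none" in parser.directives


if __name__ == "__main__":
    # Hypothetical page source, for illustration only.
    sample = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print("noindex found" if has_noindex(sample) else "no noindex tag")
```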

Troubleshooting tips

If you’re noticing that some of your pages are being crawled by Google but not appearing in the search results, there are a few potential causes and solutions to check:

1. Make sure your pages are being served correctly. If Googlebot is receiving an error when trying to crawl your page (e.g., a 404 Not Found error), that page won’t be indexed. Check your server logs to see if there are any errors being returned for pages that aren’t appearing in the search results.

2. Check whether the pages are blocked by robots.txt. If your robots.txt file is blocking Googlebot from crawling your pages, they won’t be indexed. Review your robots.txt file and make sure it isn’t inadvertently blocking any of your pages from being crawled and indexed.

3. Inspect the page source for noindex tags or directives. If a page has a noindex tag or meta Robots directive, it won’t be indexed even if it’s accessible to Googlebot. Check the source code of your pages to see if there are any noindex tags or directives that might be preventing them from being indexed.

4. Make sure the pages aren’t too new or recently updated. It can take some time for newly published or updated pages to be indexed by Google (especially if they don’t have many other links pointing to them). If you just published or made significant changes to a page, give it some time before checking to see if it’s been indexed.
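The first and third checks in this list can be combined into one small helper. The sketch below takes an HTTP status code and response headers, which you could read from your server logs or any HTTP client, and lists anything that would keep the page out of the index. The function name and message wording are assumptions for illustration, and the header check handles only the simple comma-separated form of `X-Robots-Tag`, not values prefixed with a specific crawler name.

```python
def indexing_blockers(status_code: int, headers: dict) -> list:
    """Return a list of reasons this response would keep a page out of the index."""
    problems = []

    # Error responses such as 404 or 500 cannot be indexed.
    if status_code >= 400:
        problems.append(f"HTTP {status_code} error response")
    # A redirect means the requested URL itself is not the page that gets indexed.
    elif 300 <= status_code < 400:
        problems.append(f"HTTP {status_code} redirect")

    # Header names are case-insensitive, so normalize them before the lookup.
    normalized = {key.lower(): value for key, value in headers.items()}
    directives = [
        part.strip().lower()
        for part in normalized.get("x-robots-tag", "").split(",")
    ]
    if "noindex" in directives or "none" in directives:
        problems.append("X-Robots-Tag header contains noindex")

    return problems


if __name__ == "__main__":
    # Hypothetical responses, for illustration only.
    print(indexing_blockers(200, {"Content-Type": "text/html"}))
    print(indexing_blockers(404, {}))
    print(indexing_blockers(200, {"X-Robots-Tag": "noindex, nofollow"}))
```

An empty list means neither the status code nor the header is blocking the page, so the cause is more likely to be content quality, duplication, or simply time.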

Conclusion

There are a number of potential reasons why your pages are being crawled but not indexed by Google. One common reason is that the site or page is new and Google hasn’t had time to fully index it yet. Other potential causes include a robots.txt file that blocks Google from crawling your site, a noindex tag, or duplicate content on your site. If you’re unsure why your pages aren’t being indexed, the best course of action is to reach out to a qualified SEO professional for help.
